From atoms to quarks, to the cosmos – Internet of Things helps lab explore the origins of the universe

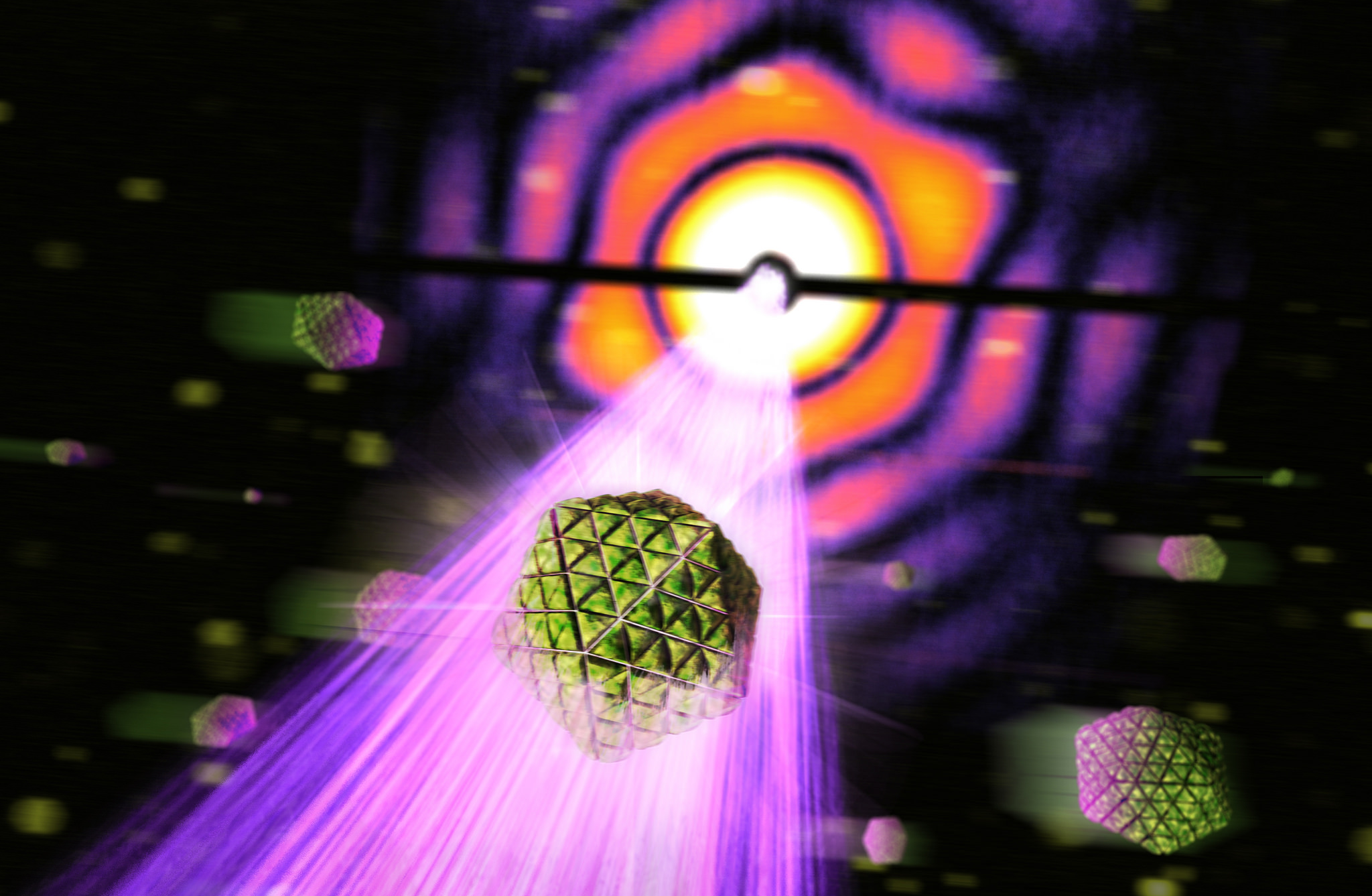

Nestled in the hills west of Stanford University lies the longest and straightest building in the world: the SLAC National Accelerator Laboratory, a two-mile-long particle accelerator built in the 1960s.

Over its 50-year history, SLAC has helped scientists discover new drugs, new materials for electronics and new ways to produce clean energy. Research conducted there has revealed intimate details of atoms, led to the discovery of matter’s fundamental building blocks, and unveiled insight into the origin of the universe and the nature of dark energy.

Six scientists have been awarded Nobel Prizes for work done at the center, and more than 1,000 scientific papers are published each year based on research conducted there. Today Stanford University operates SLAC for the Department of Energy (DOE) as one of the DOE’s 10 Office of Science laboratories. SLAC runs one of the world’s most powerful X-ray laser beams and generates some of the most valuable scientific data in the world.

“Since the beam is expected to run 24/7, it can be thought of as a utility,” says Marcus Keenan, manager of instrumentation and controls for SLAC’s Facilities and Operations directorate. “Researchers come here from all over the world and can wait for years to have beam access; they may only have two or three time slots to do the work they need to do.”

It’s a precious allotment of time, and if that utility goes down, the science stops. The biggest potential impact is the loss of science and discovery: “If an experiment is running, you can imagine a situation where a team who may have traveled from another country may be close to collecting data that could lead to a science breakthrough and the beam goes down, abruptly ending the experiment. In some cases they’re able to make up the time, but in some cases they have to start over.”

After decades running the facility, SLAC’s crew is finely tuned, but unexpected outages do happen. The uptime for the beam is estimated at about 95 percent, with downtimes scheduled a few times a year for planned maintenance.

To keep improving the availability of this critical scientific resource, SLAC’s facilities and information technology groups are collaborating to explore the Internet of Things (IoT). The internal team is working on a plan to take data from all the intelligent sensors that monitor the vast systems at SLAC and feed it into the cloud, where it can be processed, analyzed and delivered back to control engineers so they can take action before a potential failure occurs.

“This epitomizes the value of the Internet of Things and machine learning to SLAC,” says James Williams, SLAC CIO. “The intelligent systems tell us of an issue before we use the system data to manually figure it out. This capability aligns with the strategy of the laboratory to maintain core facilities and ensure that we constantly are aware of the state of the facility’s health so that we can maximize science.”

Keenan is partnering with Mayank Malik, chief architect for SLAC computing, and Jerry Pierre, solution architect for SLAC, on this important paradigm shift for typical information technology and facilities organizations — going from “keeping things running” to ensuring that they are “enabling the science through enabling technologies.”

Controlling the variables at unprecedented scale

It’s not just stoppages that affect the science happening at SLAC. Keeping a two-mile-long, 50-year-old particle accelerator running as consistently and reliably as the day it was built is just as complicated as it sounds.

The facility’s support systems are tightly controlled, with tens of thousands of sensors monitoring every conceivable condition. The goal is to create the most consistent environment possible, removing every possible variable from the equation, so the results obtained from experiments conducted at the facility are as pure as can be.

It’s a critical role: Some experiments have the potential to chew up three-and-a-half gigabytes per second, and the scientists who travel to SLAC will rely on that data to conduct their research at home, sometimes post-processing data for years. Little things at the front end, like power and temperature levels, can inject unwelcome variables that could skew their interpretation and foul their research.

Some of Keenan’s direct reports work from a control room that monitors the many support systems for the lab — power distribution, process control, heating and cooling, building automation, just to name some key areas. The power systems alone are a huge effort: The team monitors 350 sophisticated power meters that offer trends on roughly 100 different parameters each.

“All in all, we have hundreds of thousands of devices that are in some way involved with the control system for the facilities,” Keenan says. “Many of them are critical, because from end to end, we have to have power, and there’s a lot of stuff in between to make that happen.”

With sensors becoming so much more affordable and powerful, the facility is deploying them at a much larger scale than ever before, requiring the team to monitor tens of thousands of data points in real time and continually adjust the facility’s thresholds to keep a constant set of conditions. It’s a process that never stops, and operators go through it all, system by system, every day, looking at charts with 10 to 20 trend lines on them for patterns that may be deviating from normal. It is a process highly dependent on operator skill and knowledge, and one well suited to machine learning.

“Setting alarm thresholds across tens of thousands of data points is not a scalable way for us to handle this,” Keenan says. “It takes years, actually, to fine tune it all. If we set parameters too tight, we get lots of false alarms, if we set them too loose, we may not get an alarm we need.”
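The trade-off Keenan describes can be illustrated with a minimal sketch. The readings and alarm bands below are invented for illustration and are not SLAC data; the function name `count_alarms` is likewise hypothetical.

```python
def count_alarms(readings, low, high):
    """Count readings that fall outside the [low, high] alarm band."""
    return sum(1 for r in readings if r < low or r > high)

# Hypothetical coolant temperatures in degrees C: ordinary jitter around 30
# plus one genuine excursion (33.5). These values are made up.
readings = [30.1, 29.8, 30.4, 30.0, 29.6, 30.3, 33.5, 30.2, 29.9, 30.5]

tight = count_alarms(readings, 29.9, 30.2)     # band narrower than the jitter
loose = count_alarms(readings, 25.0, 35.0)     # band wider than the excursion
balanced = count_alarms(readings, 29.0, 31.0)  # hand-tuned band

print(tight, loose, balanced)
```

With the tight band, most of the alarms are ordinary jitter; with the loose band, the one real excursion goes unnoticed. Finding the balanced band by hand for each of tens of thousands of data points is the part that does not scale.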

The visibility to take a smarter approach

Today’s volume of sensor-generated data can be overwhelming for engineers using traditional, manual processes, but there is no doubt that it can also provide enormous benefits for SLAC to help keep the massive facility constant. A pilot project targeting one of the facility’s klystron cooling systems was recently launched to make better use of these heavy data streams. Working with Microsoft Open Technologies to streamline the process, SLAC used the open source project ConnectTheDots.io to integrate the facility’s diverse array of sensor formats into the cloud with Azure Event Hubs. The data is then packaged and routed through Azure Machine Learning for near real-time analysis.
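As a rough sketch of what integrating diverse sensor formats can involve, the Python below maps two made-up input formats onto one common JSON record before it would be handed to a cloud ingestion service. The field names, the format labels `csv` and `kv`, the sample timestamp and the functions `normalize` and `to_message` are all assumptions for illustration; they are not the ConnectTheDots.io schema or any Azure API, and no cloud call is made.

```python
import json

def normalize(fmt, raw, timestamp):
    """Map a source-specific reading onto one common record shape.

    Both input formats are hypothetical examples of legacy sensor output.
    """
    if fmt == "csv":
        # e.g. "pump-07,flow_rate,49.8,L/min"
        sensor_id, measure, value, unit = raw.split(",")
    elif fmt == "kv":
        # e.g. {"tag": "pump-07", "name": "flow_rate", "val": 49.8, "uom": "L/min"}
        sensor_id, measure = raw["tag"], raw["name"]
        value, unit = raw["val"], raw["uom"]
    else:
        raise ValueError(f"unknown sensor format: {fmt}")
    return {
        "sensor_id": sensor_id,
        "measure": measure,
        "value": float(value),
        "unit": unit,
        "timestamp": timestamp,
    }

def to_message(record):
    """Serialize a normalized record as a JSON string ready for transport."""
    return json.dumps(record, sort_keys=True)

ts = "2015-01-01T00:00:00Z"  # placeholder timestamp
a = normalize("csv", "pump-07,flow_rate,49.8,L/min", ts)
b = normalize("kv", {"tag": "pump-07", "name": "flow_rate",
                     "val": 49.8, "uom": "L/min"}, ts)
print(to_message(a))
```

Once every source produces the same record shape, downstream analysis no longer needs to know which kind of device a reading came from.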

“The first thing we want to do is actually get usable information out of the data we’re already collecting,” Keenan says. “We’ve been collecting years and years of data, but how much of it can we call information?”

For the current pilot, real-time data from the klystron cooling system is run through anomaly detection algorithms. The goal is to give operators a baseline for what’s normal and what’s not under certain conditions. Rather than having control-room operators cycle through hundreds of charts to look for patterns, the machine-learning algorithms do the legwork, allowing the operators to focus on actual issues detected by the system.
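The article does not say which anomaly detection algorithms the pilot uses. As a stand-in, the sketch below uses a simple rolling z-score: each new reading is compared with a baseline built from the readings just before it. The function name, window size, limit and flow values are all assumptions chosen for illustration.

```python
from collections import deque
from statistics import mean, stdev

def rolling_anomalies(values, window=5, z_limit=3.0):
    """Return indices of values that deviate more than z_limit standard
    deviations from the mean of the preceding `window` values."""
    history = deque(maxlen=window)
    flagged = []
    for i, v in enumerate(values):
        if len(history) == window:
            mu = mean(history)
            sigma = stdev(history)
            if sigma > 0 and abs(v - mu) > z_limit * sigma:
                flagged.append(i)
        history.append(v)  # the baseline keeps moving with the data
    return flagged

# Hypothetical coolant flow readings with one brief dip that recovers.
flow = [50.0, 50.2, 49.9, 50.1, 49.8, 50.0, 44.0, 50.1, 49.9, 50.0]
flagged = rolling_anomalies(flow)
print(flagged)
```

Only the dip is returned, so an operator sees one item to investigate rather than a chart to scan. A production system would need something more robust, since in this sketch the anomalous value itself enters the baseline and widens it for the next few readings.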

“The advantage we gain is that the algorithms can look through everything at once and just return the things that we actually need to focus on, so we can take action,” Keenan says. “This is one small-scale test in a klystron cooling system, and it’s working.”

One recent example showed the team how powerful the solution has the potential to be: “We had a pump fail,” Keenan says. “The system went down, and the beam went down.”

Looking back through their data, the team found a potential indicator two days earlier: what may have been a “tattletale,” a brief anomaly that soon goes back to normal.

“I took that same data and ran it through the Azure ML service, and the algorithm identified the anomaly — it actually tagged it,” Keenan says. “So in that scenario, the system would have seen the tattletale, and we would have taken action to investigate the potential issue or planned appropriate maintenance.”

From predictive to proactive: teaching the machine

The ability to see problems before they happen is just the beginning. According to Keenan, the facility could also operate more efficiently by analyzing data to understand when maintenance actually needs to be performed, rather than expending the effort at recurring intervals when it may not be needed. That process can be greatly improved with machine learning.

Another big challenge to be addressed lies in the vastly disparate systems involved in a 50-year-old facility. As things evolve, scientific programs come and go, and systems are installed, upgraded and removed (or not). With so many critical systems operating across two miles and five decades, it’s nearly impossible to tell which ones depend on each other. Missing an interdependency can lead to a range of unintended consequences, from additional costs, to downtime, to potentially even safety issues.

“We can have something happening a mile up the line affecting something two miles away,” Keenan says. “The better we understand the dependencies between all the components and the systems, the better we can allocate resources and plan for the next upgrade. Machine learning is going to be key for us in improving that.”
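One very simple way to start looking for such dependencies is to rank pairs of sensor series by how strongly they move together. The sketch below does this with a hand-written Pearson correlation. It is only an illustration under stated assumptions: the sensor names and values are invented, the article does not describe SLAC’s method, and correlation alone does not prove that one system depends on another.

```python
from itertools import combinations
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation of two equal-length series (0.0 if either is flat)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        return 0.0
    return cov / (sx * sy)

def rank_pairs(series):
    """Return (|correlation|, name_a, name_b) tuples, strongest first."""
    pairs = [
        (abs(pearson(xs, ys)), a, b)
        for (a, xs), (b, ys) in combinations(series.items(), 2)
    ]
    return sorted(pairs, reverse=True)

# Hypothetical series from three sensors at different points along the line.
series = {
    "pump_speed": [1.0, 2.0, 3.0, 4.0, 5.0],
    "downstream_flow": [2.0, 4.0, 6.0, 8.0, 10.0],
    "hall_temp": [3.0, 1.0, 4.0, 1.0, 5.0],
}
ranked = rank_pairs(series)
print(ranked[0])
```

Pairs at the top of the ranking are candidates for an engineer to check, not confirmed dependencies. Real interdependencies may also appear only with a time lag, which this sketch does not account for.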

Long term, the goal for SLAC’s collaborative facilities-information technology team is to deploy machine learning more broadly, applying it to the millions of data points that run in and out of the control systems. This includes data that is used to control and monitor the beam itself. A process of continued learning is envisioned, so that the “system” can understand conditions and predict anomalies with greater and greater prescience. Getting there requires a continuous process of receiving alerts from the system and feeding information back into it on the root causes of the problem.

“The full circle would be where we have operators working through a queue of anomalies and feeding intelligence back to the algorithm on what the cause of that anomaly is,” Keenan says. “That puts our operators into the role of teaching the system, instead of the current role, where we’re scrambling to try to get insight into what’s happening and reacting to a problem.”
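The loop Keenan describes, with operators working through a queue and feeding causes back, might be sketched as follows. The class `FeedbackLoop`, its methods, and the sensor and cause names are all hypothetical. A simple tally of confirmed causes stands in here for retraining a real model.

```python
from collections import Counter, defaultdict, deque

class FeedbackLoop:
    """Queue of detected anomalies plus a record of operator-confirmed causes."""

    def __init__(self):
        self.queue = deque()                 # anomalies awaiting review
        self.causes = defaultdict(Counter)   # sensor_id -> cause tallies

    def report(self, sensor_id, detail):
        """Called by the detector when it flags an anomaly."""
        self.queue.append((sensor_id, detail))

    def review_next(self, root_cause):
        """Operator takes the oldest anomaly and records its root cause."""
        sensor_id, _detail = self.queue.popleft()
        self.causes[sensor_id][root_cause] += 1
        return sensor_id

    def likely_cause(self, sensor_id):
        """Most frequently confirmed cause for this sensor, or None."""
        counts = self.causes.get(sensor_id)
        return counts.most_common(1)[0][0] if counts else None

loop = FeedbackLoop()
for detail in ("flow dip", "flow dip", "flat reading"):
    loop.report("pump-07", detail)

loop.review_next("bearing wear")
loop.review_next("bearing wear")
loop.review_next("sensor glitch")

print(loop.likely_cause("pump-07"))
```

Each reviewed anomaly leaves the system slightly better informed, so the next alert from the same sensor can arrive with a suggested cause attached.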

In that way, Keenan says, his team is learning to listen to the machine, and teaching the machine to communicate back.

“That’s the end goal,” he says. “The machine is telling us what its symptoms are ahead of any problem.”

It’s all in the name of science, and keeping the beam going for the next 50 years.

Read more technology stories on Microsoft’s Internet of Things blog.

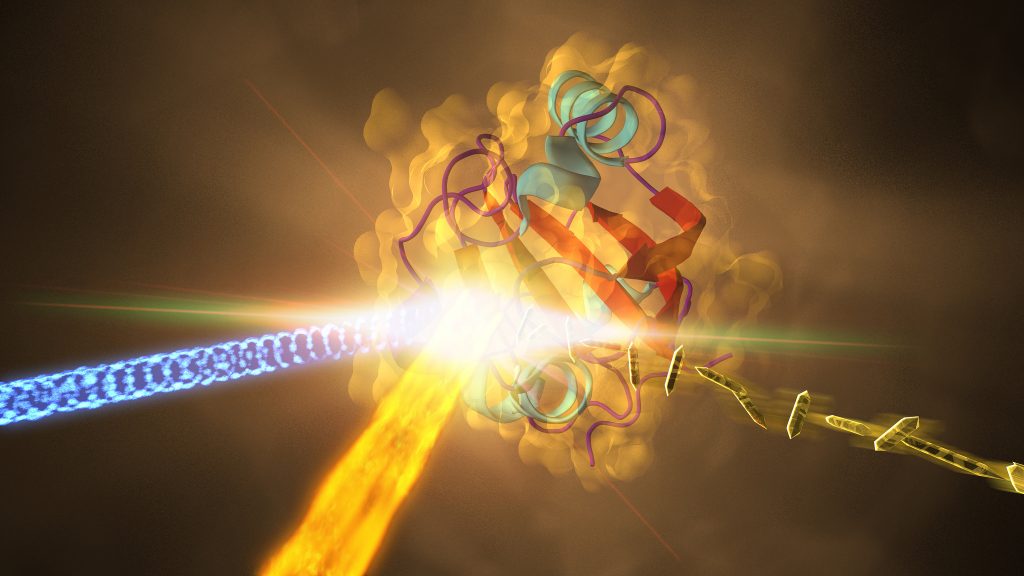

Lead image caption: This illustration depicts an experiment at SLAC that revealed how a protein from photosynthetic bacteria changes shape in response to light. (Photo courtesy SLAC.)