How PhotoDNA for Video is being used to fight online child exploitation

In the past, when someone tipped off the Internet Watch Foundation’s (IWF) criminal content reporting hotline to an online video they thought included child sexual abuse material, an analyst at the U.K. nonprofit often had to watch or fast-forward through the entire video to investigate it.

Because people sharing videos of child sexual abuse often embed this illegal content in an otherwise innocuous superhero flick, cartoon or home movie, it could take 30 minutes or several hours to find the content in question and determine whether the video should be taken down and reported to law enforcement.

Last year, IWF, a global watchdog organization, started leveraging PhotoDNA — a tool originally developed by Microsoft in 2009 for still images — to identify videos that have been flagged as child sexual abuse material. Now it often takes only a minute or two for an analyst to find illegal content.

Microsoft is now making PhotoDNA for Video available for free, and any organization worldwide interested in using the technology can visit the Microsoft PhotoDNA website to find out more, or to contact the team.

“It’s made a huge difference for us. Until we had PhotoDNA for Video, we would have to sit there and load a video into a media player and really just watch it until we found something, which is extremely time-consuming,” says Fred Langford, deputy chief executive of IWF, which collaborates with sexual abuse reporting hotlines in 45 countries around the world.

“This means we can identify and disrupt online sexual abuse and help victims much faster,” says Langford.

PhotoDNA for Video builds on the same technology employed by PhotoDNA, a tool Microsoft developed with Dartmouth College that is now used by over 200 organizations around the world to curb sexual exploitation of children. Microsoft leverages PhotoDNA to protect its customers from inadvertently being exposed to child exploitation content, helping to provide a safe experience for them online.

PhotoDNA has also enabled content providers to remove millions of illegal photographs from the internet; helped convict child sexual predators; and, in some cases, helped law enforcement rescue potential victims before they were physically harmed.

In the meantime, though, the volume of child sexual exploitation material being shared in videos instead of still images has ballooned. The number of suspected videos reported to the CyberTipline managed by the National Center for Missing and Exploited Children (NCMEC) in the United States increased more than tenfold, from 312,000 in 2015 to 3.5 million in 2017. As required by federal law, Microsoft reports all instances of known child sexual abuse material to NCMEC.

Microsoft has long been committed to protecting its customers from illegal content on its products and services, and applying technology the company already created to combating this growth in illegal videos was a logical next step.

“Child exploitation video content is a crime scene. After exploring the development of new technology and testing other tools, we determined that the existing, widely used PhotoDNA technology could also be used to effectively address video,” says Courtney Gregoire, Assistant General Counsel with Microsoft’s Digital Crimes Unit. “We don’t want this illegal content shared on our products and services. And we want to put the PhotoDNA tool in as many hands as possible to help stop the re-victimization of children that occurs every time a video appears again online.”

A recent survey of survivors of child sexual abuse from the Canadian Centre for Child Protection found that the online sharing of images and videos documenting crimes committed against them intensified feelings of shame, humiliation, vulnerability and powerlessness. As one survivor was quoted in the report: “The abuse stops and at some point also the fear for abuse; the fear for the material never ends.”

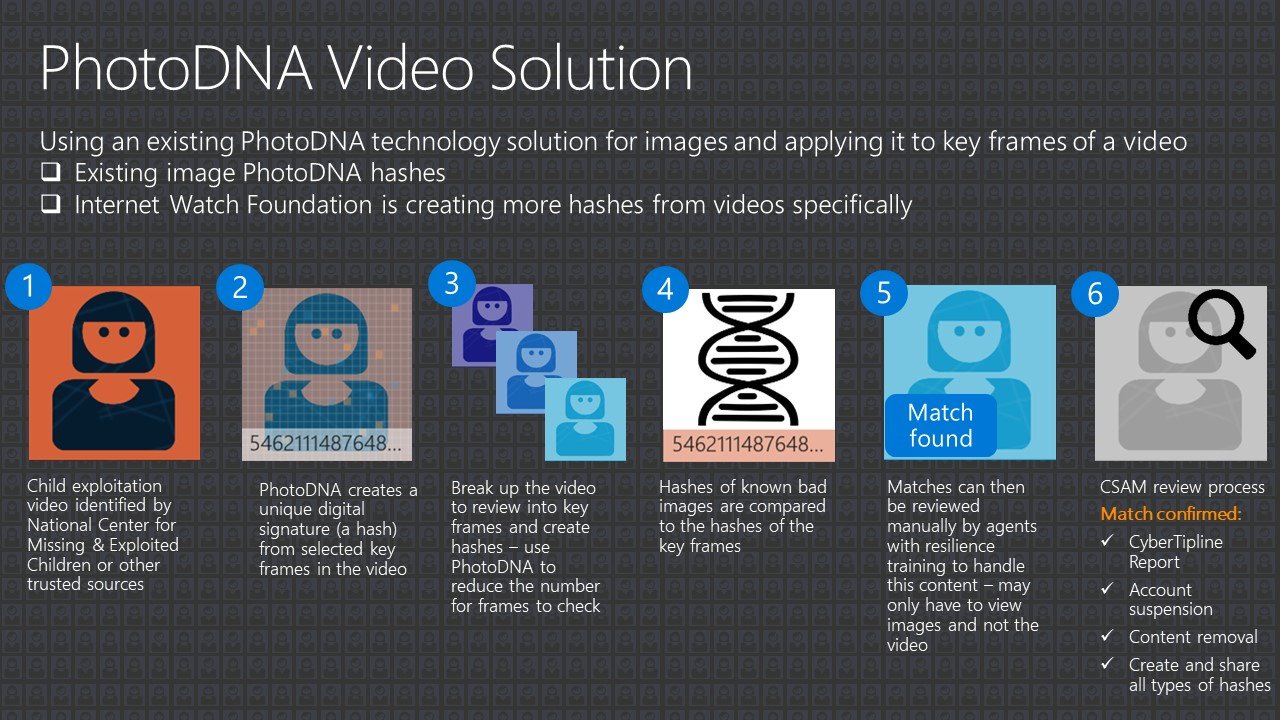

The original PhotoDNA helps put a stop to this online recirculation by creating a “hash” or digital signature of an image: converting it into a black-and-white format, dividing it into squares and quantifying that shading. It does not employ facial recognition technology, nor can it identify a person or object in the image. It compares an image’s hash against a database of images that watchdog organizations and companies have already identified as illegal. IWF, which has been compiling a reference database of PhotoDNA signatures, now has 300,000 hashes of known child sexual exploitation materials.
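To make the idea of a signature concrete, here is a minimal Python sketch of a toy perceptual hash. It is not the PhotoDNA algorithm, whose details are not described here; it only mirrors the steps named above (convert to grayscale, divide into squares, quantify the shading) and then compares the result against a list of known signatures. The function names, the 4x4 grid and the threshold of 40 are all assumptions chosen for illustration.

```python
# Toy perceptual hash: NOT the PhotoDNA algorithm, only an illustration of
# "convert to grayscale, divide into squares, quantify the shading".
# All names, the grid size and the threshold are illustrative assumptions.

def to_grayscale(rgb_image):
    """Convert rows of (r, g, b) tuples to rows of 0-255 luminance values."""
    return [[(299 * r + 587 * g + 114 * b) // 1000 for (r, g, b) in row]
            for row in rgb_image]

def grid_hash(gray, grid=4):
    """Average the shading in each cell of a grid x grid layout.

    Assumes the image is at least `grid` pixels wide and tall.
    """
    height, width = len(gray), len(gray[0])
    signature = []
    for gy in range(grid):
        for gx in range(grid):
            y0, y1 = gy * height // grid, (gy + 1) * height // grid
            x0, x1 = gx * width // grid, (gx + 1) * width // grid
            cell = [gray[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            signature.append(sum(cell) // len(cell))
    return signature

def distance(sig_a, sig_b):
    """Sum of absolute differences; 0 means identical signatures."""
    return sum(abs(a - b) for a, b in zip(sig_a, sig_b))

def find_match(signature, known_signatures, threshold=40):
    """Return the index of the first known signature within the threshold, else None."""
    for index, known in enumerate(known_signatures):
        if distance(signature, known) <= threshold:
            return index
    return None

# Synthetic 8x8 images: a gradient, a slightly brightened copy, and its mirror.
original = [[(x * 32, x * 32, x * 32) for x in range(8)] for _ in range(8)]
altered = [[(r + 2, g + 2, b + 2) for (r, g, b) in row] for row in original]
unrelated = [list(reversed(row)) for row in original]

known = [grid_hash(to_grayscale(original))]
altered_hit = find_match(grid_hash(to_grayscale(altered)), known)
unrelated_hit = find_match(grid_hash(to_grayscale(unrelated)), known)
print(altered_hit, unrelated_hit)
```

Because the comparison tolerates small differences instead of requiring an exact match, the brightened copy still matches the known signature while the mirrored image does not. A signature of this kind records only coarse shading, so it cannot identify a person or object in the image.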

PhotoDNA for Video breaks down a video into key frames and essentially creates hashes for those screenshots. In the same way that PhotoDNA can match an image that has been altered to avoid detection, PhotoDNA for Video can find child sexual exploitation content that’s been edited or spliced into a video that might otherwise appear harmless.

“When people embed illegal videos in other videos or try to hide them in other ways, PhotoDNA for Video can still find it. It only takes a hash from a single frame to create a match,” says Katrina Lyon-Smith, senior technical program manager who has implemented the use of PhotoDNA for Video on Microsoft’s own services.
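The single-frame matching Lyon-Smith describes can be sketched in the same spirit. The Python below is a toy illustration, not PhotoDNA for Video: frames are tiny grayscale grids, "key frames" are approximated by sampling every second frame (a stand-in assumption, since the real key-frame selection is not described here), and the names, grid size and threshold are hypothetical.

```python
# Toy sketch: NOT PhotoDNA for Video. Frames are small grayscale grids, and
# key-frame selection is approximated by sampling every `step`-th frame.
# All names and parameter values are illustrative assumptions.

def frame_signature(frame, grid=2):
    """Average the shading in each cell of a grid x grid layout."""
    height, width = len(frame), len(frame[0])
    signature = []
    for gy in range(grid):
        for gx in range(grid):
            y0, y1 = gy * height // grid, (gy + 1) * height // grid
            x0, x1 = gx * width // grid, (gx + 1) * width // grid
            cell = [frame[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            signature.append(sum(cell) // len(cell))
    return signature

def distance(sig_a, sig_b):
    """Sum of absolute differences between two signatures."""
    return sum(abs(a - b) for a, b in zip(sig_a, sig_b))

def scan_video(frames, known_signatures, step=2, threshold=20):
    """Return the indexes of sampled frames that match any known signature."""
    hits = []
    for index in range(0, len(frames), step):
        signature = frame_signature(frames[index])
        if any(distance(signature, known) <= threshold
               for known in known_signatures):
            hits.append(index)
    return hits

# A harmless flat frame and a previously flagged frame (synthetic patterns).
harmless = [[200] * 4 for _ in range(4)]
flagged = [[0, 0, 255, 255], [0, 0, 255, 255],
           [255, 255, 0, 0], [255, 255, 0, 0]]
# A lightly edited copy of the flagged frame, spliced into a harmless video.
edited = [[min(255, p + 3) for p in row] for row in flagged]
video = [harmless] * 4 + [edited, edited] + [harmless] * 2

known = [frame_signature(flagged)]
hits = scan_video(video, known)
print(hits, bool(hits))
```

One matching sampled frame is enough to flag the whole video, which is why an analyst can jump straight to the hit instead of watching from the beginning.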

Organizations that are already using an on-premises version of PhotoDNA to remove illegal images will be able to seamlessly add the capability to identify videos. Microsoft is also looking for partners to test the video technique on its PhotoDNA Cloud Service.

Automated tools like PhotoDNA have made a huge difference in the fight against online child exploitation, particularly for smaller companies that otherwise wouldn’t have the capacity or know-how to find illegal content on their apps and websites, says Cecelia Gregson, a senior King County prosecutor and attorney for the Washington Internet Crimes Against Children Task Force.

Gregson estimates that 90 percent of the cases she investigates now come from CyberTipline reports submitted by companies using PhotoDNA to keep their platforms clean. Under federal law, all internet and email service providers are required to report knowledge of child pornography to NCMEC.

“This is not about looking at someone’s online shopping patterns or uploaded family photos. We are seeking files depicting the sexual abuse of children,” says Gregson. “We are concerned with protecting child victims, and about making sure the places you go online and your children go online are not riddled with images of child abuse and exploitation. The technology can also help us identify child sexual predators whose collections of images can cause further psychological, emotional and mental trauma to their victims.”

Since PhotoDNA and other tools became widely available, the number of reports to NCMEC’s CyberTipline has grown from 1 million in 2014 to 10 million in 2017, says John Shehan, vice president for NCMEC’s exploited children division.

“These technologies allow companies, especially the hosting providers, to identify and remove child sexual content more quickly,” says Shehan. “That’s a huge public benefit.”

Learn how to detect, remove and report child sexual abuse materials with PhotoDNA for Video.

Lead photo of Microsoft Cybercrime Center by Benjamin Benschneider.