REDMOND, Wash., July 16, 2009 — One of the biggest challenges for oceanographers is the sheer scale of their subject. The idea of monitoring currents, sunlight, tectonic plate movements and innumerable other factors over the course of decades and thousands of square miles has long seemed like a pipe dream.

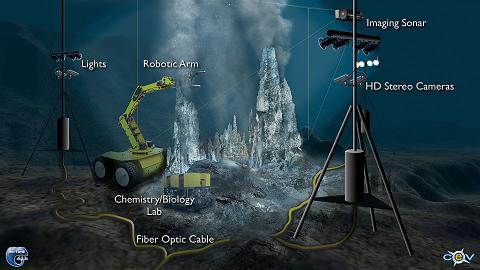

Now the National Science Foundation’s (NSF) Ocean Observatories Initiative is aiming to bring scientists closer to that objective. With hundreds of miles of fiber optic cables, sensors and cameras, “Project Neptune” will soon be retrieving enough data to fill two high-definition television broadcasts 24×7, allowing scientists all over the world to plug directly into ocean phenomena.

The problem now: How do you even begin to analyze that volume of information?

To solve this, the NSF, along with scientists at the University of Washington and the Monterey Bay Aquarium Research Institute (MBARI), is using a new tool from Microsoft called Project Trident: A Scientific Workflow Workbench.

Available for free download, Project Trident allows researchers in any discipline to build their own custom computer experiments without relying on computer scientists to write them from scratch.

Companion technologies Dryad and DryadLINQ extend Project Trident’s capabilities to multiprocessor systems and large computer “clusters,” enabling those experiments to handle extremely large computations, like those required by Neptune.

But what is a “workflow workbench” anyway?

“Basically it helps to automate science,” says Roger Barga, a principal architect of Project Trident and a former researcher himself. “A lot of research today is conducted ‘in silico,’ meaning it’s on your computer. And when that’s the case, your bench experiments are really workflows.”

Workflow in science is the process of turning raw data from sensors and other sources into information that researchers can use. Barga says the issue of scientific workflow began in the late 1980s, when universities and public agencies started collaborating and sharing data across the then-nascent Internet.

“A lot of government agencies started putting their computational resources on the Web — big data sets — and making them available as a Web service,” he says. “But you needed technology to call on the Web service, to call a local tool on your machine, to call the cluster at the national supercomputer center to do the analysis, to pull the data off that, on and on. That’s when workflow really started becoming the vehicle for science.”

Over the years, technology has evolved with inexpensive sensors, more powerful computers and more advanced software, and workflow has continued to evolve along with it. Today scientists are practically drowning in data, and they need powerful systems to make sense of it all.

“In some cases it’s hard to take workflow out of the science; it has become the science,” Barga says. “Medical image processing, cancer research, all of the earth sciences, biology and the life sciences — scientific workflow is finding a niche everywhere.”

The problem, he says, is that most workflow tools are built by grad students from the ground up. That costs money and typically takes weeks or months. There’s no guarantee that a custom-built solution will work with anyone else’s, and even simple tweaks to the experiment require more development time.

“The very seasoned scientist who does not know how to program in Perl, Java or F# has to stop, find his or her technical person and ask for a change in the program,” Barga says. “It can take a long time, and that fluid interaction is broken. You’re manipulating code in a programming language, not changing experiments at the science level.”

Project Trident was created to solve this problem. The two-year project included researchers at the University of Washington, University of Oxford, MBARI and several other prominent organizations.

This illustration shows how Project Neptune, an underwater observatory being developed by University of Washington researchers and other scientists, would collect information from the sea floor. Project Trident, developed by Microsoft Research, could help scientists manage that suddenly massive flow of data.

Project Trident begins with the Windows Workflow Foundation, which is part of Microsoft .NET and comes free with every Windows-powered machine. From there the team has built all of the tools and services that are unique to scientific workflow.

Since it uses widely available Microsoft technologies, Project Trident enables scientists to plug into many different systems and reuse pieces of experiments in new ways. As part of the offering, Microsoft External Research will also build and curate a community library of research building blocks that scientists can tap into.

“And we’re providing all of that for free, and the source code with it,” Barga says. “Programmers still have to write those initial boxes, but once they put it in that library, it’s now free for scientists to use by dragging and dropping it to the appropriate point. So a biologist can change the experiment, instead of needing a programmer.”
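The idea Barga describes, with programmers writing the "boxes" once and scientists rearranging them, can be illustrated with a minimal Python sketch. This is not Project Trident's API (Trident is built on Windows Workflow Foundation and uses a visual designer). The block names, the `LIBRARY` registry, the `run_workflow` helper and the `-999.0` sentinel are all assumptions made for illustration.

```python
from typing import Callable, Dict, List

# A "building block" in this sketch is a plain function from readings to readings.
Step = Callable[[List[float]], List[float]]

MISSING = -999.0  # hypothetical sentinel a sensor reports when it has no data


def drop_missing(readings: List[float]) -> List[float]:
    """Discard sentinel values so later steps see only real measurements."""
    return [r for r in readings if r != MISSING]


def moving_average(readings: List[float]) -> List[float]:
    """Smooth the series with a two-point moving average."""
    return [(a + b) / 2 for a, b in zip(readings, readings[1:])]


def subtract_first(readings: List[float]) -> List[float]:
    """Express each reading as a change relative to the first one."""
    return [r - readings[0] for r in readings] if readings else []


# The shared library: programmers register each block here once.
LIBRARY: Dict[str, Step] = {
    "drop_missing": drop_missing,
    "moving_average": moving_average,
    "subtract_first": subtract_first,
}


def run_workflow(block_names: List[str], data: List[float]) -> List[float]:
    """Apply the named blocks in order, feeding each block's output to the next."""
    for name in block_names:
        data = LIBRARY[name](data)
    return data


raw = [10.0, MISSING, 12.0, 14.0]

# Two different "experiments" built from the same library, with no new code written.
smoothed = run_workflow(["drop_missing", "moving_average"], raw)
relative = run_workflow(["drop_missing", "subtract_first"], raw)
```

In this sketch, changing the experiment means editing a list of block names, which stands in for the drag-and-drop step; the functions themselves stay untouched.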

Project Trident also provides for what’s known as “provenance” — the ability to re-create an experiment to validate the result — along with a registered repository of workflows that lets scientists know who wrote the workflow, how long it’s been around and where its code came from.

“Weeks later if you want to know how you got a result, Project Trident tracks that so you can explain and other researchers can see,” Barga says. “And that, of course, is fundamental to the scientific method.”
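One way to picture provenance tracking is a record that captures who ran which steps on which data, plus a fingerprint of the result, so a later run can be checked against it. The sketch below is an assumption-laden illustration in Python, not a description of how Project Trident stores provenance. `ProvenanceRecord`, `run_with_provenance`, `reproduces` and the two sample steps are hypothetical names.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Callable, List, Tuple

Step = Callable[[List[int]], List[int]]


def fingerprint(data: List[int]) -> str:
    """Return a stable SHA-256 digest of the data's JSON form."""
    return hashlib.sha256(json.dumps(data).encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class ProvenanceRecord:
    workflow_name: str
    author: str
    step_names: Tuple[str, ...]
    input_hash: str
    output_hash: str


def apply_steps(steps: List[Step], data: List[int]) -> List[int]:
    """Run each step in order without modifying the caller's list."""
    result = list(data)
    for step in steps:
        result = step(result)
    return result


def run_with_provenance(name: str, author: str, steps: List[Step], data: List[int]):
    """Run the workflow and return its result together with a provenance record."""
    result = apply_steps(steps, data)
    record = ProvenanceRecord(
        workflow_name=name,
        author=author,
        step_names=tuple(step.__name__ for step in steps),
        input_hash=fingerprint(data),
        output_hash=fingerprint(result),
    )
    return result, record


def reproduces(record: ProvenanceRecord, steps: List[Step], data: List[int]) -> bool:
    """Check that re-running the steps on the same input yields the recorded output."""
    if fingerprint(data) != record.input_hash:
        return False
    return fingerprint(apply_steps(steps, data)) == record.output_hash


# Hypothetical steps used only to exercise the sketch.
def remove_negatives(values: List[int]) -> List[int]:
    return [v for v in values if v >= 0]


def double(values: List[int]) -> List[int]:
    return [v * 2 for v in values]


result, record = run_with_provenance(
    "demo-workflow", "example-author", [remove_negatives, double], [1, -2, 3]
)
```

Weeks later, calling `reproduces(record, [remove_negatives, double], [1, -2, 3])` would confirm that the same steps on the same input still give the recorded result, while a different input would be rejected.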

According to Barga, this ability to re-create experiments and apply them to different sets of data is one of the big wins of workflow, whether it’s Project Trident or another technology.

He points to a recent study of mice that was applied to data from humans, resulting in a breakthrough in our understanding of Graves’ disease.

In that case, the scientists located the workflow from the experiment with mice, “shimmed” it to work on the human data, ran it, and realized the same process was going on in Graves’ disease.

“They literally took the workflow from one animal and applied it to the human dataset, and it was performing the same analysis,” he says. “By articulating your knowledge visually, scientists get this very powerful capability to read and share each other’s experiments and methods.”
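"Shimming" a workflow can be thought of as wrapping it in a small adapter that converts a new dataset into the shape the existing analysis expects, leaving the analysis itself unchanged. The Python sketch below shows that pattern under stated assumptions. The field names `measurement` and `level`, and the functions `mean_measurement`, `with_shim` and `rename_level`, are hypothetical and say nothing about the actual study's data.

```python
from typing import Callable, Dict, List

Row = Dict[str, float]


def mean_measurement(rows: List[Row]) -> float:
    """The existing workflow: expects every row to carry a 'measurement' field."""
    return sum(row["measurement"] for row in rows) / len(rows)


def with_shim(
    workflow: Callable[[List[Row]], float],
    shim: Callable[[Row], Row],
) -> Callable[[List[Row]], float]:
    """Return a workflow that first converts each row with the shim, then runs as before."""
    def adapted(rows: List[Row]) -> float:
        return workflow([shim(row) for row in rows])
    return adapted


def rename_level(row: Row) -> Row:
    """Hypothetical shim: the second dataset stores the same quantity under 'level'."""
    return {"measurement": row["level"]}


first_dataset = [{"measurement": 2.0}, {"measurement": 4.0}]
second_dataset = [{"level": 1.0}, {"level": 3.0}]

original_result = mean_measurement(first_dataset)
shimmed_result = with_shim(mean_measurement, rename_level)(second_dataset)
```

The point of the design is that only the shim is new; the original workflow is reused exactly as written, so both datasets go through the same analysis.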

Barga says there are many other examples. Another recent discovery had to do with oceanographic work on “upwelling” — seasonal warming in the oceans that brings nutrients up from the bottom. Here a workflow model studying the effects of warm air from the coasts was applied to another study on the intersection of two major currents in the ocean.

“They found the same thing happening,” Barga says. “One analysis of data was ‘tagged’ for the kind of analysis it does. Somebody else dragged the workflow over to another set of data and applied it, and it confirmed the same phenomenon in a different set of circumstances.”

Another example involved the University of Utah’s research into the diagnosis of cancerous tumors in the colon, which can be very difficult to identify.

It turns out there are libraries of computer analyses that can be applied to medical images to isolate the tumor from the tissue, but it takes quite a bit of experience to know what function to apply and in what order.

“So they have very experienced doctors do this, and they’re a scarce commodity,” Barga says.

To overcome that, the university has developed a classification system for the tumors and associated them with seven different workflows.

“You use the workflow associated with that class and run those series of transformations,” says Barga. “Now whatever doctor first sees the results can apply a set of workflows and actually have a very good understanding immediately of how big the tumor is, how it’s invaded the tissues and how to treat it.”

Though it was originally developed for oceanography, Project Trident is also being used in Hawaii as part of the Pan-STARRS astronomy project, which uses very high-powered digital cameras to map the entire night sky in search of objects that might pose a threat to the planet. Scientists at Indiana University are using it for atmospheric science as part of their LEAD portal, also funded by the NSF.

Next up is a seven-country cooperative study with MBARI, the Office of Naval Research, the NSF and others to try to understand the interaction of typhoons with the ocean surface. There Project Trident will be used to capture 15 to 20 on-demand workflows that will run the moment researchers see a storm forming.

“How do you make a platform for science?” asks Barga. “This is just a little workflow system, but we’re excited to see what scientists can do with it.”