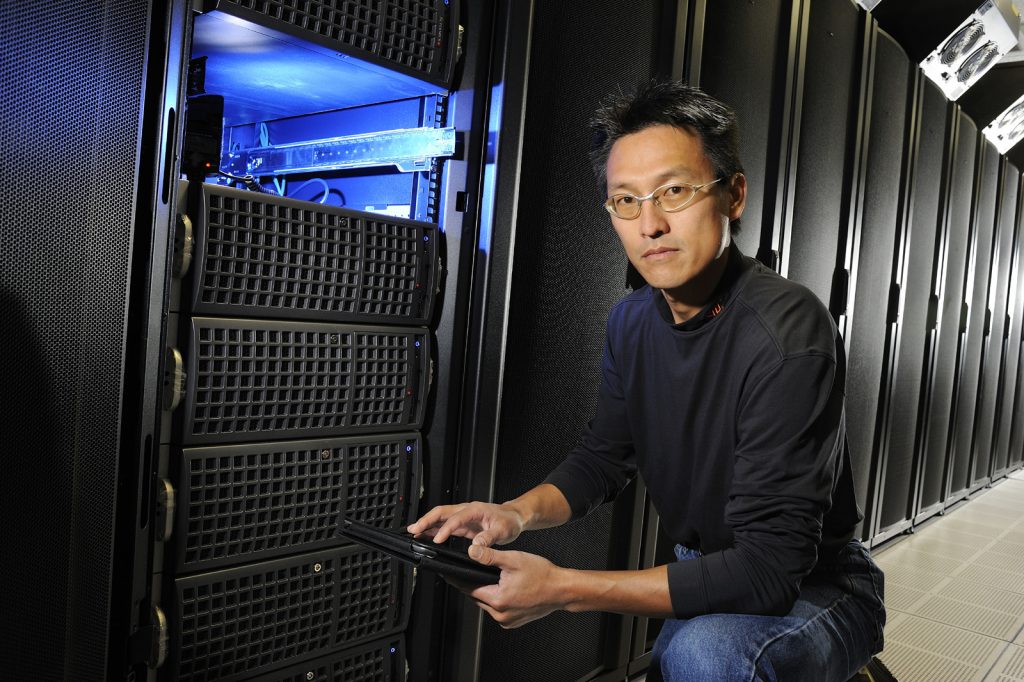

Cloud computing contributes to individually tailored medical treatments of the future

For Wu Feng, bridging medicine and computing seems natural. The award-winning Virginia Tech computer science professor who once pursued dual M.D. and Ph.D. degrees (but settled for only the latter) envisions a not-so-far-off future in which a secure cloud contains your genetic profile. That information could, in turn, lead to an early diagnosis of a potentially life-threatening disease – such as cancer – and an individually tailored life-saving treatment.

For now, this level of personalized medicine is science fiction. But Feng thinks that in a decade – or less – it could be real science.

To get to the point where technology can give people access to their genetic profiles, cloud computing plays a pivotal role. By putting resources to analyze genomes in the cloud, researchers can do their work from a variety of devices, collaborate with each other more easily and save time and money. Just a few years ago, sequencing a human genome, for example, used to cost $95 million. Now, it’s $1,000. And by 2020, it may be a matter of pennies.

Computing makes it possible to run simulations faster, which leads to more efficient lab work that could produce scientific breakthroughs. Feng and his team at Virginia Tech have developed tools to help other researchers and clinicians in their quests to find cures for cancer, lupus and other diseases.

“We’re helping them do the things they need to do to make the discoveries,” says Feng, a prolifically recognized professor in computer science and electrical and computer engineering at Virginia Tech, who won the first award from NVIDIA to “Compute the Cure” for cancer. “Many researchers, colleges and universities don’t have access to on-premises supercomputing resources to analyze large amounts of sequencing data. We can create the software they need, which they can then run on the Microsoft Cloud.”

Feng and his team leveraged a pre-existing next-generation sequencing toolkit (Genome Analysis Toolkit or GATK), made it run faster with new software, and “cloudified” it using the computing power and infrastructure of Microsoft’s Hadoop-based Azure HDInsight Service. The result is called SeqInCloud (as in “Sequencing in the Cloud”). Because of that work, biologists and oncologists can more quickly identify where mutations might occur that could lead to cancer.

“Rather than relying only on the wet lab to make their discoveries, they’re able to use validated computational models to simulate wet-lab processes in a fraction of the time,” says Feng, who says it can take minutes to sequence and analyze a genomic data set, when it used to take two weeks. “In short, while theory and experimentation have long been the two pillars of scientific inquiry and discovery, now more than ever, people view those two pillars of discovery and innovation as necessary still, but not sufficient. The third pillar is computing. Many of the experiments that we might otherwise do in a wet lab [for hands-on scientific research and experimentation] you can capture computationally and accelerate the process of discovery and innovation.”

His team also created a way to mix and match these tools through a workflow management tool called CloudFlow. While SeqInCloud is ready to debut as an open-source prototype, CloudFlow is still in development.

Feng has been in this space for a few years, as his team was one of only 13 in the U.S. chosen for a research program called Computing in the Cloud. The program, which was run by the National Science Foundation in partnership with Microsoft, “was designed to accelerate access to cloud computing for research discovery, data analysis and multidisciplinary collaboration,” he says.

“What if I had an instrument that could process blood — testing for cholesterol, for instance — and that information could go into my phone? Now, I’ve got this data on my phone. You have your genomic profile in the cloud, a nice, secure place. The doctor looks at it and says you’ve got high cholesterol,” says Feng. “But instead of throwing darts at the problem, trying something for six months to see what happens, what if he was able to simulate a reaction of encrypted drugs against your genetic makeup in the cloud? Now you’ve got a targeted treatment. Personalized medicine.”

Contrast this to our current day, when waiting for cholesterol test results can take up to two weeks, with treatments possibly requiring months of trial and error.

If Feng provides the toolkits for other scientists to unlock future breakthroughs, then the Virginia Bioinformatics Institute (VBI) at Virginia Tech are the toolmakers.

Bioinformatics began 20 years ago as genomics – the study of the genome – and has been greatly empowered by breakthrough algorithmic and computational methods, as well as constantly improving sequencing technology for data collection and faster computers for analysis. Sequencing technology evolved quickly, says Executive Director Christopher Barrett, and the cost per megabit “went through the floor.” He adds, just being able to rapidly sequence the genetic material has been a huge breakthrough, but that wouldn’t mean as much without data-centric, high-performance computation that enables interpretation and big improvements to basic storage of so much data. The Institute stands out from other organizations that relate sequence information to biological systems because its approach is holistic.

For instance, VBI’s methodology in studying infectious diseases doesn’t stop and start with the pathogen’s genome. They compute infection processes, the immune response to it, the disease’s biology, and even the social interactions and cultural environments among people, animals and insects – without which there is no epidemic at all.

“Bioinformatics is evolving into something I like to call integrative information biology,” says Barrett, who has most recently worked with the U.S. Department of Defense on Ebola.

Feng’s work syncs with VBI, which fields thousands of requests a year from biologists, microbiologists, ecologists, epidemiologists, urban planners and others from all over the world who don’t have the computational resources or expertise to process the massive amount of data now available in the field of genomics. For them, VBI provides Web-based tools such as those delivered to public health researchers and decision makers by the Network Dynamics Simulation Science Laboratory and the creation of Web services sites such as PATRIC, the Pathogenic bacteria bioinformatics Portal for the National Institutes of Health.

“Data-centric high performance computing allows us to understand these systems,” Barrett says. “The work we and Wu do, it’s different from studying, say, cancer. It’s studying things to study cancer with. If you don’t have a welder, you can’t weld.”

In his research on lupus at the Edward Via College of Osteopathic Medicine at Virginia Tech, Christopher Reilly uses VBI’s resources a few times a year to save months in the process that leads to the drug therapies that can substantially counter the inflammation characterized by the disease.

For him, the computing gets him to the lab faster, which can lead to breakthroughs in lupus treatment.

Fifty years ago, the five-year outcome for someone with lupus was a 50 percent death rate, Reilly says, but that has dropped down to less than 5 percent – due to the drugs now available to treat it and the research behind those therapies.

“I envision a day you can modify DNA,” Reilly says. “You could activate good genes and deactivate bad ones. Each person has their own genetic signature, and if you can predict the probability of lupus, then you can put that person on a specific type of therapy sooner. That’s pretty neat.”

These kinds of scientific leaps rely on speed – and that’s something Virginia Tech has in abundance, thanks to a supercomputer called HokieSpeed, created by Feng. He’s come a long way since his first computer, a Radio Shack TRS-80 Color Computer that his dad bought when he was in seventh grade “to keep us busy during the summer and out of my mom’s hair,” he laughs.

“We can use what we’re doing to test things on HokieSpeed, for example, and if it works here, at scale, we can hoist it up into the cloud to benefit biologists who may not have supercomputer resources like this,” Feng says. “People can realize HokieSpeed-like computing in the Microsoft Cloud.”

That data can then lead to visualizations that can help researchers do course corrections on the front end – “sanity checks” during simulations.

While the work of Feng, his Synergy Laboratory and his recently established SEEC Center currently has a strong focus on biology and bioinformatics, he can see applying his problem-solving process to other fields, such as cybersecurity — looking for certain code patterns the way his research looks at the letters in DNA sequencing. Feng isn’t afraid of breaking processes to make them better, and he isn’t afraid of asking “Why not?” when his proposals are met with skepticism.

“Suddenly, you’re opening doors to things you’ve only dreamed of,” says Feng.

Lead photo caption: Wu Feng, working with the HokieSpeed supercomputer at Virginia Tech.