How statistical noise is protecting your data privacy

Data helps us better understand our world. It underpins scientific advances, helps companies build better products and services and enables us to respond to societal problems. But it’s crucial to be able to use this data without compromising any sensitive or private information.

Differential privacy is a technology that allows data to be collected and shared without revealing the identities of the individuals in it. Other privacy techniques can limit how useful the data is, and some can still leave sensitive information, such as bank details, discoverable.

Differential privacy is widely regarded as the gold standard of privacy protection. Microsoft has created an open-source differential privacy tool, providing the building blocks for researchers and data scientists to test new algorithms and techniques that enable data to be used more widely and securely.

How does differential privacy work?

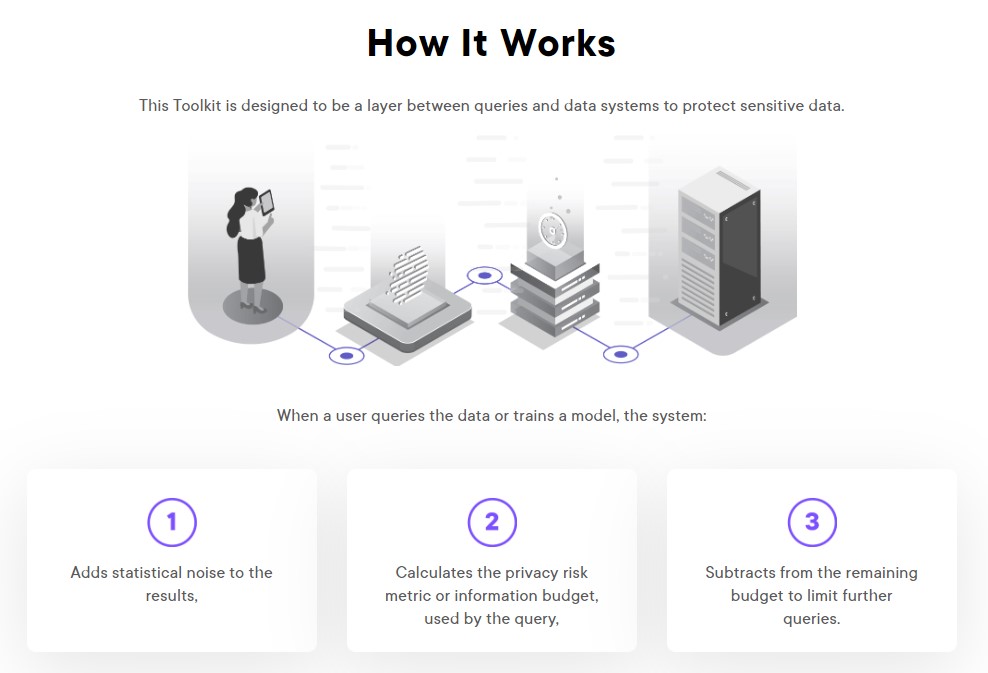

Differential privacy introduces statistical noise – small random alterations – to mask datasets. The noise hides the identifiable characteristics of individuals, so that personal information stays protected, but it is small enough not to materially affect the accuracy of the answers extracted by analysts and researchers.
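To make the idea concrete, here is a minimal sketch of one common way to add such noise, the Laplace mechanism, applied to a simple counting question. It is an illustration only, not the code used in Microsoft's tool: the function names, the made-up ages and the choice of `epsilon` (the parameter that controls how much noise is added) are all assumptions.

```python
import random

def laplace_noise(scale, rng):
    # The difference of two independent exponential draws with mean `scale`
    # follows a Laplace distribution centred on 0 with that scale.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def noisy_count(values, predicate, epsilon, rng):
    """Count the matching values, then add Laplace noise to the result."""
    true_count = sum(1 for v in values if predicate(v))
    # Adding or removing one person changes a count by at most 1,
    # so the noise scale is 1 / epsilon. Smaller epsilon means more noise.
    return true_count + laplace_noise(1.0 / epsilon, rng)

rng = random.Random(0)
ages = [23, 35, 41, 29, 62, 57, 38, 45, 31, 50]  # made-up data
answer = noisy_count(ages, lambda a: a >= 40, epsilon=0.5, rng=rng)
print(f"noisy count of people aged 40 or over: {answer:.2f}")
```

Each run returns a slightly different answer, but the answers cluster around the true count, so aggregate conclusions stay useful while any one person's presence in the data is obscured.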

This precisely calculated noise can be added at the point of data collection or when the data is analyzed.
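Adding noise at the point of collection can be illustrated with randomized response, a classic survey technique in which each person randomizes their own answer before it ever leaves their device. The sketch below is an assumption-laden example, not a description of any Microsoft product: the coin-flip probabilities, the function names and the simulated 30% "yes" rate are all invented for illustration.

```python
import random

def randomized_response(truth, rng):
    """Report the true yes/no answer only half of the time."""
    # First coin flip: heads means answer truthfully.
    if rng.random() < 0.5:
        return truth
    # Tails: answer with a second, independent coin flip instead.
    return rng.random() < 0.5

def estimate_true_rate(reports):
    """Recover the population-level 'yes' rate from the noisy reports."""
    # P(report yes) = 0.5 * p + 0.25, so p = 2 * (observed - 0.25).
    observed = sum(reports) / len(reports)
    return 2.0 * (observed - 0.25)

rng = random.Random(42)
true_answers = [i < 3000 for i in range(10000)]  # simulated: 30% say yes
reports = [randomized_response(t, rng) for t in true_answers]
estimate = estimate_true_rate(reports)
print(f"estimated share answering yes: {estimate:.3f}")
```

No single report can be taken at face value, because any "yes" may have come from a coin flip, yet the overall rate can still be estimated closely once enough reports are collected.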

Source: https://opendifferentialprivacy.github.io/#how-it-works

Before queries are permitted, a privacy “budget” is created, which sets a limit on the amount of information that can be taken from the data. Each time a query is asked of the data, the amount of information it reveals is deducted from the remaining budget. Once the budget has been used up, any further answer would risk compromising personal privacy, so additional queries are refused. It’s effectively an automatic shut-off that prevents the system from revealing too much information.
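The budget can be pictured as a simple ledger. The sketch below assumes the most basic accounting rule, in which the privacy cost (`epsilon`) of each query is simply added up; the class and method names are hypothetical, and the query itself is a stand-in that returns a label rather than a real noisy answer.

```python
class BudgetExhausted(Exception):
    """Raised when a query would exceed the remaining privacy budget."""

class PrivacyBudget:
    def __init__(self, total_epsilon):
        self.remaining = total_epsilon

    def run(self, query, epsilon):
        """Run `query` at privacy cost `epsilon` if the budget allows it."""
        if epsilon <= 0:
            raise ValueError("epsilon must be positive")
        if epsilon > self.remaining:
            # The automatic shut-off: refuse rather than reveal too much.
            raise BudgetExhausted("privacy budget used up")
        self.remaining -= epsilon
        return query(epsilon)

def stand_in_query(epsilon):
    # Placeholder for a real differentially private query.
    return f"noisy answer at epsilon={epsilon}"

budget = PrivacyBudget(total_epsilon=1.0)
budget.run(stand_in_query, 0.5)
budget.run(stand_in_query, 0.5)
try:
    budget.run(stand_in_query, 0.1)
    refused = False
except BudgetExhausted:
    refused = True
print("third query refused:", refused)
```

Real systems may use more refined accounting than straightforward addition, but the principle is the same: every answer has a cost, and the ledger cannot go below zero.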

Why do we need differential privacy?

Existing anonymization methods have been shown to be vulnerable to breaches. With enough specific or unique information, an individual’s identity can be reverse-engineered from a dataset, even if that data has been anonymized. Differential privacy helps prevent such identification.

How is it being used?

Differential privacy as a concept was introduced in 2006 in research co-authored by Microsoft Research scientists, and recent advances have meant that its use has grown substantially in the past few years.

At Microsoft, it is one of several efforts underway to protect privacy, and it is already in use in a number of products. For example, it helps us improve Windows by privately collecting end users’ usage data remotely. It protects LinkedIn members’ data while allowing advertisers to see audience insights and marketing analytics. And, along with AI, differential privacy helps improve workflow by suggesting replies to emails in Office.

Microsoft is committed to privacy and will continue to explore how open data, differential privacy and cybersecurity can help make our lives easier.

For more on how Microsoft protects your privacy, visit our privacy hub and follow @MSFTIssues on Twitter.