AI ‘for all’: How access to new models is advancing academic research, from astronomy to education

In early 2023, Professor Alice Oh and her colleagues at the Korea Advanced Institute of Science and Technology (KAIST) realized they needed to address the quickly growing interest in ChatGPT among KAIST’s students.

ChatGPT – a tool developed by OpenAI that runs on large language models and generates conversational responses based on people’s prompts – could lead to students taking shortcuts in their work but might also offer educational benefits, they reasoned. The group wanted to develop a research project that would engage students in using the technology, so they began thinking about how to develop their own chat application.

“We were in a hurry,” says Oh, a professor in the KAIST School of Computing. “Our semester started in March, and we wanted to have students start using this right away when the semester started.”

A solution soon emerged. In April 2023, Microsoft Research launched an initiative that aims to accelerate the development and use of foundation models – large-scale AI models trained on vast amounts of data that can be used for a wide range of tasks.

Accelerating Foundation Models Research (AFMR) provides academic researchers with access to state-of-the-art foundation models through Azure AI Services, with the goal of fostering a global AI research community and offering robust, trustworthy models that help further research in disciplines ranging from scientific discovery and education to healthcare, multicultural empowerment, legal work and design.

The initiative’s grant program includes 200 projects at universities in 15 countries, spanning a broad range of focus areas. Researchers at Boston’s Northeastern University are working on an AI-powered assistant designed to appear empathetic toward workers’ well-being. At Ho Chi Minh City University of Technology in Vietnam, researchers plan to create a fine-tuned large language model (LLM) specifically for Vietnamese. In Canada, researchers at the Université de Montréal are exploring how LLMs could help with molecular design and the discovery of new drugs.

Accessing foundation models can be challenging for academic researchers, who must often wait to use shared resources that can lack the computing power needed to run large models. Microsoft Research created the initiative to give researchers access to a range of powerful foundation models available through Azure and ensure that the development of AI is driven not just by industry, but also by the academic research community.

“We realized that to develop AI today, there is really a need for industry to open up capacity for academia,” says Evelyne Viegas, senior director of Research Catalyst at Microsoft Research. “Those different viewpoints could shape what we’re doing.”

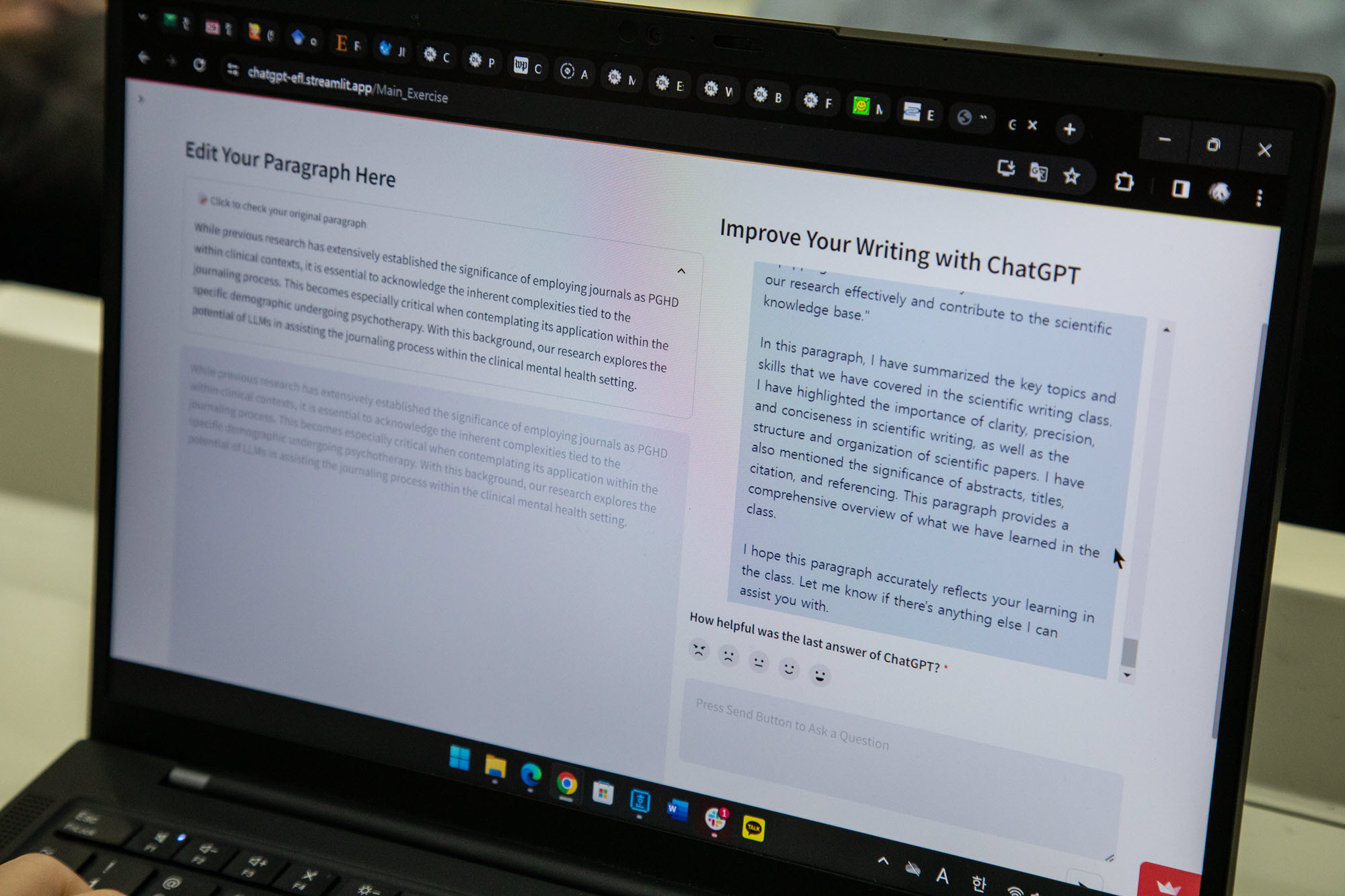

With access to Azure OpenAI Service, which combines cutting-edge models from OpenAI with security, privacy and responsible AI protections offered in Azure, Oh and the KAIST team developed a platform that uses the models underlying ChatGPT for a chatbot to help college students write essays for English as a Foreign Language (EFL) courses. Students often write at night, when guidance from professors or teaching assistants isn’t available, Oh says, and EFL students frequently use tools to help navigate the challenges of writing in English.

Oh’s team designed the chatbot to answer students’ questions but not write their essays for them. Over a semester, 213 EFL students used the tool to refine their essays, and the platform collected the students’ questions and the essay revisions they made based on the chatbot’s responses. Oh’s team then analyzed the data and published a paper about the experiment.

The researchers found that some students used the platform extensively and incorporated the feedback it provided. Many treated the chatbot like an “intelligent peer,” Oh says, suggesting that the technology can be a helpful complement to classroom instruction. And since the platform uses GPT-4, a large multimodal model developed by OpenAI that can communicate in multiple languages, students sometimes switched between English and their native language when using the platform, enabling more natural interactions.

The KAIST team plans to expand the platform to creative writing and conversational English classes. Oh sees tremendous potential for generative AI in education, particularly if models can be trained to show students how to reason through problems rather than simply providing answers.

“Universities should take full advantage of this and really start to think about how we can use these tools for scientific research and education,” she says.

‘Like having a super adviser’

Researchers at North Carolina Agricultural and Technical State University, who received a grant under the Microsoft program, are developing an AI-based traffic monitoring system capable of identifying road congestion and safety hazards. The project is aimed at automating much of the manual work required by traditional traffic monitoring systems.

The researchers used GPT-4 alongside other AI models that rely on traffic data collected by the federal government to analyze traffic patterns and congestion. Users interact with the system through a chatbot and can ask questions about current traffic conditions – like how busy traffic is at a particular location or the speed at which vehicles are traveling.

“It will make traffic management easier and more efficient,” says Tewodros Gebre, a Ph.D. student working on the project.

The system uses GPT-4 to interpret traffic data collected from sensors, drones and GPS, allowing transportation agencies, city planners and citizens who aren’t necessarily data scientists to quickly get information about traffic conditions through the chat application.

“We talk about data equity, and this combination with the chatbot makes the system available to people without them needing to go to this complex model and see what’s going on,” says Leila Hashemi-Beni, an associate professor in geospatial and remote sensing at the university. “People with different skill sets can still get the information they need from this system.”

The system, which is still in development, could also help identify the best evacuation routes after a natural disaster, she says.

“It’s not just transportation. This project has much bigger, broader impact. It gives us the opportunity for cutting-edge research that is very helpful to us as researchers and educators.”

A collaboration between astronomers at Harvard University and The Australian National University is leveraging GPT-4 in a different way. Seeking to use LLMs to accelerate astronomy research, the group, called UniverseTBD, developed an astronomy-based chat application that draws from more than 300,000 astronomy papers.

Alyssa Goodman, the Robert Wheeler Wilson Professor of Applied Astronomy at Harvard, says the application could eventually help young astronomers extract key information from academic papers and analyze data to develop their own research and theories.

“If you have a really good idea, it’s very hard to just search the literature and try to find everything,” Goodman says. “This is sort of like having a super adviser, a brilliant astronomer with an encyclopedic memory who can say, ‘Well, that could be a very good idea and here’s why,’ or ‘That’s likely a bad idea and here’s why.’”

The researchers hope to develop smaller language models for astronomy that will be accessible to astronomers of all levels, says Ioana Ciucă, the Jubilee Joint Fellow at The Australian National University, who is leading UniverseTBD with Sandor Kruk, a data scientist at the European Space Agency, and Kartheik Iyer, a NASA Hubble Fellow at Columbia University.

“Our mission is to democratize science for everyone,” she says. “GPT-4 is a very large language model and it runs on a lot of resources. In our pursuit of democratizing access, we want to build smaller models that learn from GPT-4 and can also learn to speak the language of astronomy better than GPT-4. That’s what we’re envisioning.”

Many of the AFMR research projects focus on using LLMs for a range of societal benefits, from leveraging generative AI to assess pandemic risk to using vision and language models to help people who are blind or have low vision navigate outdoors.

At the University of Oxford, researchers are studying how to make LLMs more diverse by broadening the human feedback that informs them. The researchers reviewed 95 academic papers and found that human feedback used to tailor and evaluate the outputs of LLMs traditionally comes from small groups of people that don’t necessarily represent the larger population. Consequently, researcher Hannah Rose Kirk says, LLMs become tailored to the preferences and values of “an incredibly narrow subset of the population that ends up using those models.”

That can mean, for example, that if you ask a language model to help plan a wedding, you’re likely to get information about a stereotypical Western wedding – big white dress, roses and the like (this finding was part of a 2021 study).

“Imagine a model that could learn a bit more from an individual or sociocultural context and adapt its assistance to helping you plan your wedding, or at least to know that weddings look different for different people – it should ask a follow-up question to work out which path to go down,” says Kirk, a Ph.D. student at Oxford who researches LLMs.

For their AFMR project, Kirk and Scott Hale, an Oxford associate professor and senior research fellow at the Oxford Internet Institute, surveyed 1,500 people from 75 countries about how often they use language models and what characteristics of those generative AI models – such as reflecting their values or being factual and honest – are important to them. The study participants then had a series of real-time conversations with a variety of generative AI models and rated their outputs.

With Azure capabilities, Kirk and Hale will use the feedback and conversations of participants from around the world to train and fine-tune language models to make them more diverse. They plan to make their dataset available to other researchers and hope the work will encourage more inclusive language models.

“People don’t want to feel like this technology was made for others and not for me,” Hale says. “In terms of the utility that someone can receive from it – if they can use it as a conversational agent to reason through something or make a decision, to look something up or access content – that benefit should be equally distributed across society.”

“When designing generative AI models, having more people at the table, having more perspectives represented ultimately leads to better technology, and it leads to technology that will raise everyone up,” he says.

‘A catalyst for global change’

After OpenAI released ChatGPT in November 2022, Viegas says, Microsoft Research wanted to understand how academic researchers might use and benefit from generative AI. The organization saw an opportunity to create a global network of academic researchers focused on evolving foundation models to better align AI with human goals, advance beneficial uses of AI and further scientific discovery. The AFMR program was also designed to support a series of voluntary commitments drafted by the Biden administration to foster trustworthy and secure AI systems, she says.

The grant program provides access to models and customizable APIs through Azure AI Services, which take advantage of Azure’s built-in security and compliance protocols that enable research in sensitive areas such as healthcare. The initiative also gives participants the opportunity to meet and learn from each other at conferences and virtual events, and to work with Microsoft Research scientists.

“It’s one thing to have access to the resources, but it’s another thing to accelerate your own research by seeing what others are doing,” Viegas says.

Ultimately, she says, the initiative aims to foster a global and diverse research community and apply AI for the greater good.

“We want to make sure that AI isn’t just for the few. Our aspiration is for AI to become a catalyst for global change, for the benefit of all,” she says.

Related links:

- Learn more: Microsoft’s Accelerating Foundation Models Research grant program

- Learn more: The Prism Alignment Project: Participatory, Representative and Individualised feedback for Subjective and Multicultural Alignment

- Read more: AstroLLaMA-Chat: Scaling AstroLLaMA with Conversational and Diverse Datasets

Top image: Alice Oh, a professor in the KAIST School of Computing, is among academic researchers in 15 countries around the world to receive a grant through a new Microsoft Research initiative. Photo by Jean Chung for Microsoft.