Microsoft unveils a smartphone app in Japan featuring Rinna the chatbot and a combination of powerful new AI technologies

Artificial intelligence (AI) that can see and comment on the world around us will soon be interacting much more naturally with people in their daily lives thanks to a powerful combination of new technologies being trialed in Japan through a chatty smartphone app.

The app features Microsoft Japan’s hugely popular social chatbot, Rinna. The app was unveiled at Microsoft Tech Summit 2018 in Tokyo on Monday and is still in development.

The AI behind the app has enhanced sight, hearing, and speech capabilities that let it recognize and talk about the objects it sees much as a person would. As such, it represents a significant step toward a future of natural interactions between AI and people. At the heart of the app is the “Empathy Vision Model,” which combines conventional AI image recognition technology with emotional responses.

With this technology, Rinna views her surroundings through a smartphone’s camera. She not only recognizes objects and people but can also describe and comment on them aloud in real time. Using natural language processing, speech recognition, and speech synthesis technologies developed by scientists at Microsoft Research, she can engage in natural conversations with the phone’s user.

“A user can hold their smartphone in their hand or place it in a breast pocket while walking around. With the camera switched on, Rinna can see the same scenery, people, and objects as the user and talk about all of it with the user,” Microsoft Japan President Takuya Hirano said.

Unlike other AI vision models, Rinna can describe her impressions of what she is viewing with feeling, rather than just listing off recognition results such as the names, shapes, and colors of the things she sees. Rinna on a smartphone can view the world from the same perspective as a user and can converse with that user about it.

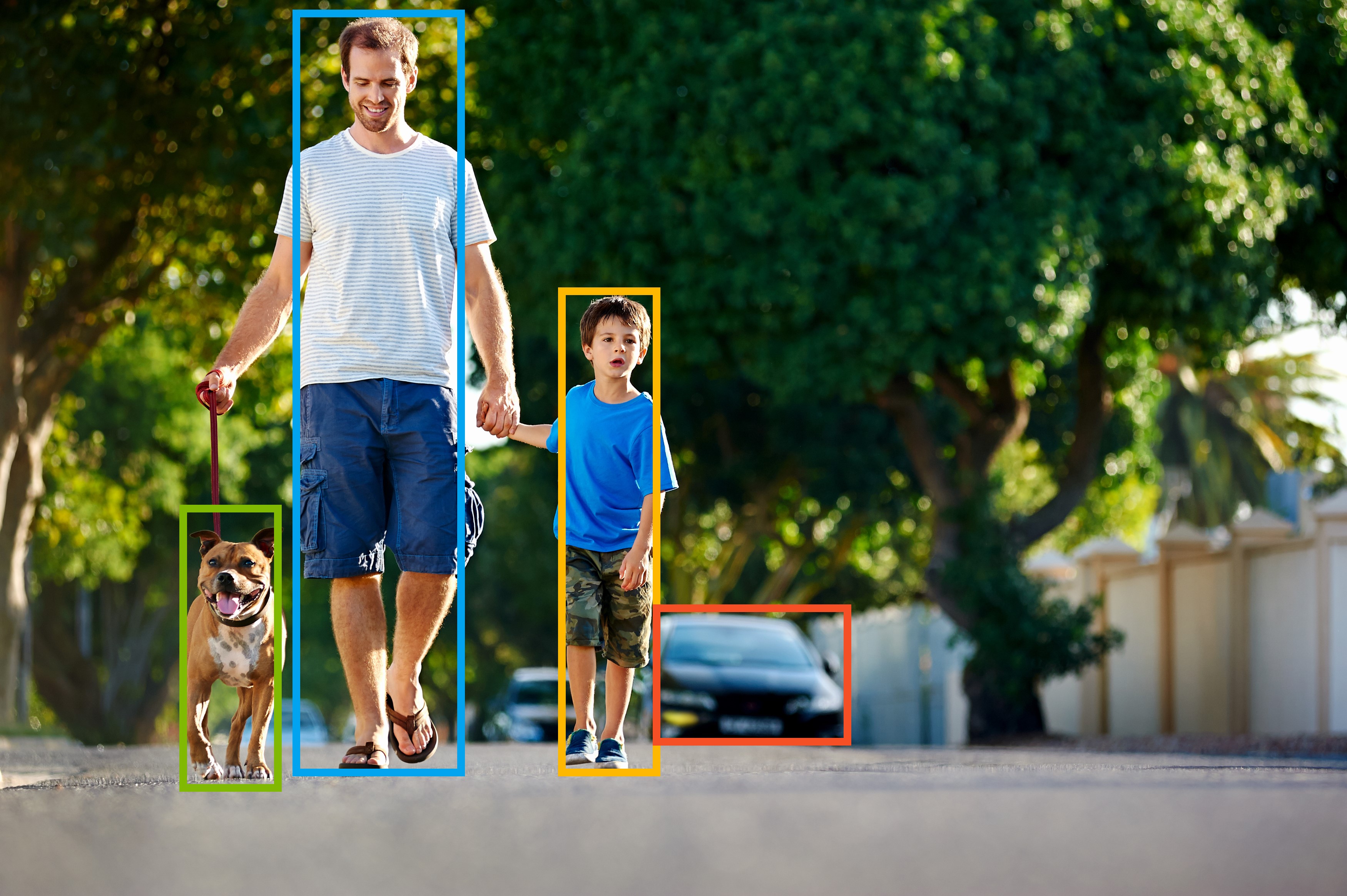

The image above helps illustrate the difference:

Conventional AI vision technology might react like this: “I see people. I see a child. I see a dog. I see a car.”

In contrast, Rinna with the Empathy Vision Model might say: “Wow, nice family! Enjoying the weekend, maybe? Oh, there’s a car coming! Watch out!”

In addition to the Empathy Vision Model, which generates empathetic comments in real time about what the AI sees, Rinna’s smartphone app includes other cutting-edge capabilities, among them “full duplex.” This enables the AI to take part in natural, telephone-like conversations with a person by anticipating what that person might say next.

This capability helps Rinna decide how and when to respond to someone who is chatting with her, a skill that comes naturally to people but is uncommon in chatbots. Full duplex differs from “half duplex,” which is more like a walkie-talkie exchange in which only one party to a conversation can talk at a time. Full duplex reduces the unnatural lag that can make interactions between people and chatbots feel awkward or forced.

Rinna’s smartphone app also incorporates Empathy Chat, which aids independent thinking by the AI and helps keep a conversation with the user going as long as possible. In other words, the AI selects the responses most likely to keep the person engaged and talking.

The app is still in development, and no date has been set for its general release. However, its voice chat function is available as “Voice Chat with Rinna” on Rinna’s official LINE account in Japan.