At The University of Hong Kong, a full embrace of generative AI shakes up academia

Hong Kong – Leon Lei, who teaches data science in the faculty of education at The University of Hong Kong (HKU), recently produced a textbook – in 30 hours.

Using a mix of generative AI and other tools, Lei turned transcripts and slides from a series of online classes he taught during pandemic lockdowns into text (15 hours), then edited and compiled it into a 10,000-word course book (another 15 hours). He also converted chapters to mind maps – diagrams which show concepts in visual form – and created video clips.

“Students have diverse learning styles,” said Lei, who is running AI clinics across the university on using AI tools for teaching. “Some want to listen, watch. Some want a mind map first. Before this, I didn’t have time to explore.”

Generative AI is causing teachers to rethink how they teach and how they can prepare students for the future. Administrators are rethinking what universities should teach so that graduates have the skills future employers will want.

“When did we last have this kind of shake-up?” said Pauline Chiu, associate vice president for teaching and learning at HKU. “We’ve got the attention of teachers, parents, students. It’s an opportunity to reinvent our teaching. Now that’s a big positive.”

Generative AI tools – built on large language models (LLMs) that synthesize massive troves of data to generate text, code, images and more – are seen as the biggest technological leap since the web browser and smartphones. But while the technology is powerful, it can deliver imperfect results, and learning institutions have spent the last few months grappling with how to deploy it responsibly – if at all.

The advent of generative AI is raising bigger questions about what sort of future universities should be preparing students for. “What should we be teaching in university alongside it? What do future employees need? What kind of other human skills do we need to be teaching? How do you collaborate with other human beings? What about relationship building?” said Chiu.

HKU, a research-led comprehensive university founded in 1911, is the oldest university in Hong Kong and known for its medical school. It’s ranked as the top university in Hong Kong and 35th globally, according to the Times Higher Education World University Rankings 2024.

Earlier in the year, HKU initially banned the use of generative AI tools. “We knew it was temporary,” said Chiu. In February, a task force made up of staff, students and technologists began meeting weekly to discuss the implications of the new technology.

When Microsoft Azure OpenAI Service, powered by OpenAI’s GPT, became generally available in January, the university’s IT department acted first. “We said AI is the future,” said Flora Ng, chief information officer and university librarian. “It is what we need to pursue to enhance research, teaching and learning.”

HKU was already using Microsoft’s solutions, and the IT department made an unusual decision to go ahead and fund Azure OpenAI Service for staff only from April to June, so they could test it out and understand the impact of generative AI. Usually, new IT funding involves tender documents with detailed requirements and buy-in from various departments – which can take months.

In this case, “nobody knew what the total requirements should be,” said Ng. “Our IT department said we will take a risk; we’re going to fund it. The strategy for me was to quickly adopt, then if it fails, to pivot.”

In June, the HKU senate officially endorsed a generative AI policy that established it as a “fifth literacy” for students, alongside oral, written, visual and digital literacy. At the end of August, HKU and seven other universities in Hong Kong announced they were making Azure OpenAI Service available to all staff and students, with few restrictions, when the new school semester started in September.

“Our stance is to embrace it,” said Chiu, adding that it is up to teachers whether to limit its use in their courses or for certain assignments.

“There may be situations in which we want students to learn the basics by limiting the use of generative AI,” said Chiu. “But it will be up to the teachers to make that decision.”

The university has rolled out several generative AI chatbots, built with Azure OpenAI Service. An IT helpdesk chatbot answers simple queries, freeing staff up to deal with more complicated issues. Another chatbot deals with administrative questions, such as how to sign up for a course, and a third answers questions about undergraduate course selection.

Staff and students can also access a more general HKU chatbot for teaching and learning.

Early usage statistics have been encouraging. In the first 20 days after its launch on September 1, more than 10 percent of the 36,400 students used the general HKU chatbot, as did about 17 percent of the 13,100 staff. Meanwhile, the IT helpdesk chatbot received 1,276 inquiries between August 21 and September 13.

To protect user data and privacy, the chatbots do not keep any data on queries. “We don’t look at what they ask. We don’t keep any records,” said Ng.

Students are taught basic generative AI literacy in AI workshops run by HKU’s Teaching and Learning Innovation Centre. They learn that its results are not always perfect, but that the HKU chatbot can be a good idea generator. They also learn that it is not a search engine but a language synthesizer, and they are told to always check original sources for accuracy.

The big concern, of course, is that students become too reliant on generative AI to complete assignments without really understanding the material. However, HKU teachers will not be relying on AI detection tools because they aren’t accurate or reliable today. Such tools can produce both false negatives and false positives, and a false positive could lead to a student being wrongly accused of cheating.

Instead, teachers are asked to reinvent assessment, said Cecilia Chan, director of HKU’s Teaching and Learning Innovation Centre. “Exactly what do we want the student to learn?” said Chan. “Think about the learning process, outcomes and experience, that is what is important.”

A teacher could, for example, ask for a skills demonstration or an oral presentation instead of an essay. Or they could ask the chatbot to generate a number of essays and have students critique them: Are there factual errors? Students could add their opinions and maybe generate an essay plan. It’s a sort of reverse engineering of an essay, where “you can still have all the learning objectives of an essay,” said Chan.

Students could also be asked to demonstrate competency through hands-on work at different stations, as in the medical school’s Objective Structured Clinical Exam, the kind of task that AI cannot do.

Chan said she herself uses generative AI tools “like a personal assistant,” including for answering the many emails she receives asking her for interviews or to speak at conferences. To those who worry about relying on it too much, she offers a comparison.

“Can you imagine life without one of these?” she asks, waving her smartphone. “That’s what we are getting used to.”

Students are already figuring all this out for themselves.

Lai Yan Ying, also known as Cheri, is a fourth-year student majoring in linguistics. She said she wouldn’t use generative AI to write an essay but thinks it’s fair to use it to generate ideas, such as questions for a recent research project in which she interviewed someone about their experience learning English.

“I don’t think we can just use ChatGPT for everything,” said Lai. “Sometimes, I just prefer to go to a library and grab a book.”

For Yan Wing Lam, a fourth-year engineering major, generative AI is less of a mind shift: “In engineering, we are quite into AI already. It’s just like a tool to me.”

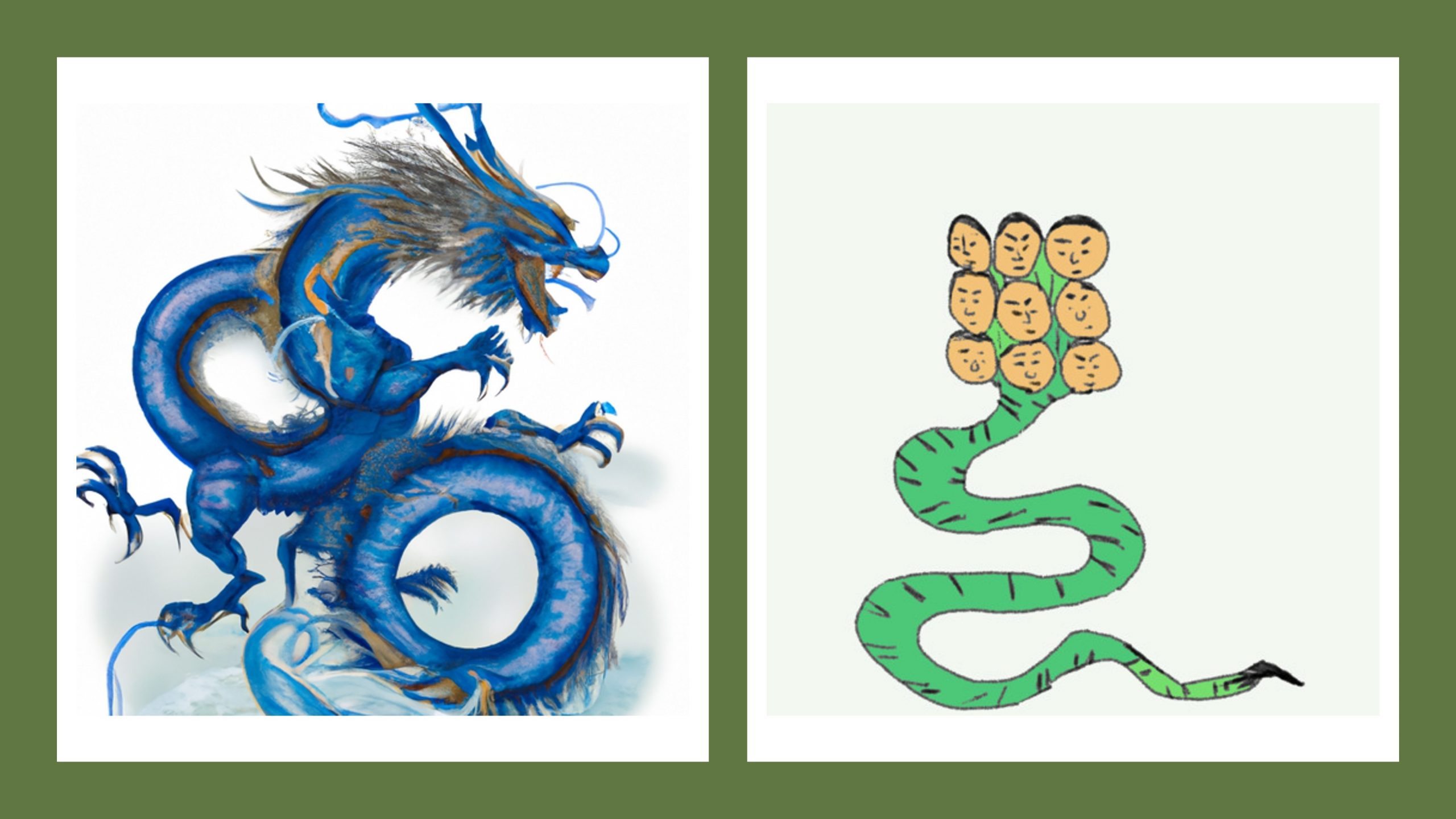

When Lai and Yan worked together on a recent project, they encountered both the promise and the limitations of the tool. For a course called Digitizing Cultural Heritage in Greater China, they decided to use OpenAI’s DALL·E 3 image generator via Azure OpenAI Service on the HKU chatbot to create pictures of Chinese mythical creatures – with their instructor’s blessing.

They were only partially successful.

A prompt for “blue dragon with horns and claws” successfully brought forth a picture of Qing Long, an azure dragon god in Chinese mythology. However, it took a few tries to generate a usable picture of Chi Ru, a fish with a human face.

Attempts to conjure up a likeness of Xiang Liu, a nine-headed monster snake, kept returning pictures of single-headed snakes. Lai ended up making her own digital drawing of Xiang Liu, which took about half an hour, versus just seconds using DALL·E 3.

Their four-person team got an A on the project.

Top image: Leon Lei, who teaches data science in the faculty of education, is using generative AI tools to create mind maps and short videos for students with different learning styles. Photo by Lam Hei Chun for Microsoft.