Technology, ethics and the law: Grappling with our AI-powered future

Digital technology is advancing every day. The vast computational power of the cloud has combined with an immense accumulation of data. Artificial intelligence (AI) is spreading all around us, and computers will behave more and more like humans.

How will this global transformation affect individuals and society? And what laws should be enacted to guide the march of progress in a digital world?

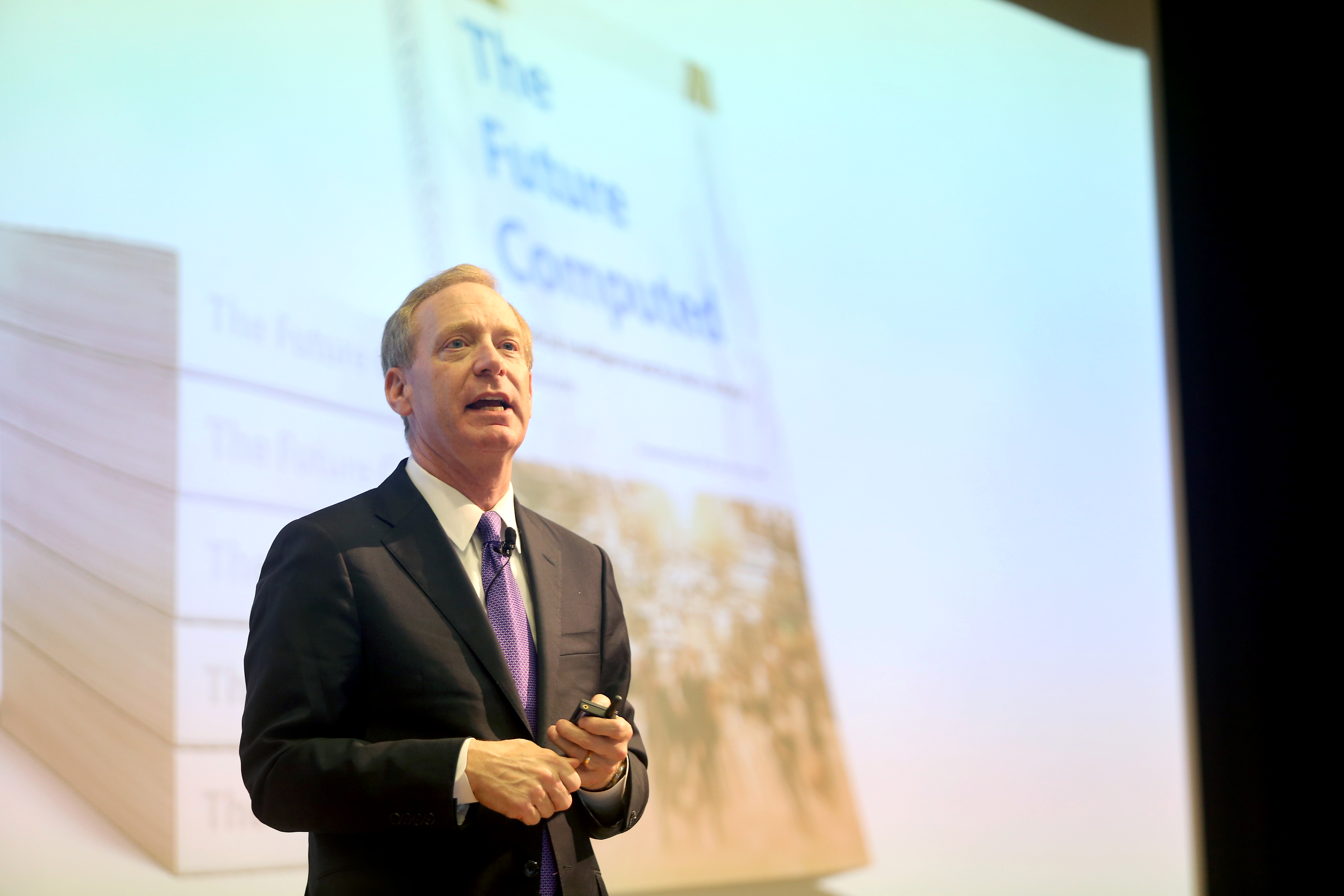

For Brad Smith, the President of Microsoft, it starts with asking a fundamental ethical question about “not just what computers can do, but also what they should do.”

“That is the question every community, and every country around the world, is going to need to ask itself over the course of the next decade,” he told lawyers, policymakers, and academics during a visit to Singapore – a city-state with an impressive track record of embracing new technologies and innovation.

While developers, quite rightly, get excited about the ground-breaking products they are creating, “we cannot afford to look at the future with uncritical eyes,” he warned.

As computers start to act more like humans, there will be societal challenges. “We not only need a technology vision for AI, we need an ethical vision for AI,” he said.

These ethical issues are not only for "engineers and tech companies … In effect, they are for everybody," because growing numbers of people and organizations are creating their own AI systems from the technological "building blocks" that companies like Microsoft produce.

Smith – who spoke at the TechLaw.Fest conference and the Lee Kuan Yew School of Public Policy at the National University of Singapore – said the Southeast Asian hub city “is a great place to see the future and just how broadly dispersed the creation of AI is and will become.”

Earlier this year, Smith and Harry Shum, Executive Vice President of Microsoft's AI and Research Group, co-authored The Future Computed: Artificial Intelligence and its role in society. During his Singapore visit, Smith explored six key ethical principles to consider.

Fairness. “What does it mean to create technology that starts to make decisions like human beings? Will the computers be fair? Or will the computers discriminate in ways that lawyers, governments, and regulators will find to be unlawful? … If the dataset is biased then the AI system will be biased.” Part of the answer is urgently addressing the current lack of diversity in today’s male-dominated tech sector.

Reliability and Safety. Most of today’s product liability laws and norms are based on the impact of technologies invented a century or more ago. These will need to evolve to address the rise of computers and AI. “People need to understand not only what AI can do, but also the limits of what AI can do.” That means keeping humans in the loop and testing AI systems extensively.

Privacy and Security. “As we read about the issues in the news, and we think about where AI and other information technologies are going, there are few issues that are more important. We will have to start by applying the privacy laws that exist today, but then think about the gaps in these legal rules … so people can manage their data, so we can design systems to protect against bad actors and ensure responsible data use.”

Inclusiveness. “Many people have a disability. AI-based systems will either make their day better or make their day worse. It all depends on whether the people who are designing AI systems are designing them with their needs in mind.”

All four of these areas rely on the final two principles:

Transparency. “There is a doctrine of ‘explainability’ rapidly emerging in the AI field.” In other words, the people who create AI systems have a responsibility to ensure that those who use, or who are affected by, those systems know “how the algorithms actually work. And that is going to be a very complicated issue.”

Finally, there is the “ultimate bedrock principle” of Accountability. “As we think about empowering computers to make more decisions, we fundamentally need to ensure that computers remain accountable to people. And, we need to make sure that the people who design these computers and these systems remain accountable to other people and the rest of society.”

These six principles complement each other. Even so, Smith said, “they don’t quite do everything that I believe the world will need.”

“That’s why we have started to call for the creation of, in effect, a Hippocratic Oath for coders, like the one we have for doctors – a principle that calls on the people who design these systems to ensure that AI [will] ‘do no harm’.”

Smith said the release of his book with Shum has sparked a global conversation. “We have found that it’s getting students around the world to get together and design different versions of this oath and that is exactly what the world needs to engage people in defining for themselves an ethical future.”

Ultimately, however, it will not be enough just for the world to agree on ethics. “Because if we have a consensus on ethics and that is the only thing we do, then what we are going to find is that only ethical people will design AI systems ethically. That will not be good enough … We need to take these principles and put them into law.

“Only by creating a future where AI law is as important a decade from now as, say, privacy law is today, will we ensure that we live in a world where people can have confidence that computers are making decisions in ethical ways.”

Meanwhile, the range of skillsets needed by technology companies is broadening. “Science, technology, engineering, and math (STEM) will be more important than ever. But as computers behave more like humans, the social sciences, the humanities, will become much more important to the creation of technology than they have been in recent years.”